Translation Providers

AI Localization Automator supports five AI providers, each with its own strengths and configuration options. Choose the one that best fits your project's needs, budget, and quality requirements.

Ollama (Local AI)

Best for: privacy-sensitive projects, offline translation, unlimited usage

Ollama runs AI models locally on your machine, giving you full privacy and control with no API costs and no internet connection required.

Popular Models

- llama3.2 (recommended for general use)

- mistral (an efficient alternative)

- codellama (code-aware translations)

- Many other community models

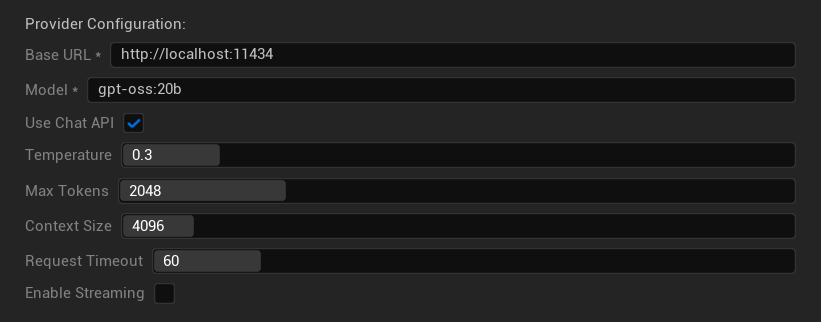

Configuration Options

- Base URL: local Ollama server (default: http://localhost:11434)

- Model: name of a locally installed model (required)

- Use Chat API: enable for better conversation handling

- Temperature: 0.0-2.0 (0.3 recommended)

- Max Tokens: 1-8,192 tokens

- Context Size: 512-32,768 tokens

- Request Timeout: 10-300 seconds (local models can be slower)

- Enable Streaming: process the response in real time
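To make the options above more concrete, here is a minimal sketch of how they could map onto a request to Ollama's HTTP API. The function name `build_ollama_request` and the prompt wording are hypothetical, and the mapping of the plugin's settings to Ollama's `temperature`, `num_predict`, and `num_ctx` options is an assumption; the plugin's actual request format is not documented here.

```python
import json


def build_ollama_request(base_url, model, text, target_lang,
                         use_chat_api=True, temperature=0.3,
                         max_tokens=1024, context_size=4096, stream=False):
    """Return (url, body) for a translation request to an Ollama server.

    Hypothetical helper: it only builds the request, it does not send it.
    """
    prompt = f"Translate the following text into {target_lang}:\n{text}"
    body = {
        "model": model,
        "stream": stream,                  # Enable Streaming
        "options": {
            "temperature": temperature,    # Temperature
            "num_predict": max_tokens,     # Max Tokens (assumed mapping)
            "num_ctx": context_size,       # Context Size (assumed mapping)
        },
    }
    root = base_url.rstrip("/")
    if use_chat_api:
        # Chat endpoint takes a list of role/content messages.
        url = f"{root}/api/chat"
        body["messages"] = [{"role": "user", "content": prompt}]
    else:
        # Generate endpoint takes a single prompt string.
        url = f"{root}/api/generate"
        body["prompt"] = prompt
    return url, body


url, body = build_ollama_request("http://localhost:11434", "llama3.2",
                                 "Start Game", "French")
print(url)
print(json.dumps(body, indent=2))
```

Toggling `use_chat_api` switches between the chat and generate endpoints while the sampling options stay the same.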

Strengths

- ✅ Complete privacy (no data leaves your machine)

- ✅ No API costs or usage limits

- ✅ Works offline

- ✅ Full control over model parameters

- ✅ Wide range of community models

- ✅ No vendor lock-in

Considerations

- 💻 Requires local setup and capable hardware

- ⚡ Generally slower than cloud providers

- 🔧 Requires more technical setup

- 📊 Translation quality varies significantly by model (some can outperform cloud providers)

- 💾 Large storage requirements for models

Setting Up Ollama

- Install Ollama: download it from ollama.ai and install it on your system

- Download models: use `ollama pull llama3.2` to download your chosen model

- Start the server: Ollama runs automatically, or start it with `ollama serve`

- Configure the plugin: set the base URL and model name in the plugin settings

- Test the connection: the plugin verifies connectivity when you apply the configuration
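If you want to confirm the setup yourself before opening the plugin, the sketch below checks whether a model appears in the list that Ollama's `/api/tags` endpoint returns. The helper names are hypothetical, and this is not how the plugin performs its own connectivity check; it is only an independent sanity check under the assumption that the server is reachable at the configured base URL.

```python
import json
import urllib.request


def installed_models(tags_response):
    """Extract model names from a parsed /api/tags response."""
    return [m["name"] for m in tags_response.get("models", [])]


def is_model_installed(tags_response, model):
    """Return True if `model` is listed; a bare name is treated as ':latest'."""
    wanted = model if ":" in model else f"{model}:latest"
    return wanted in installed_models(tags_response)


def fetch_tags(base_url="http://localhost:11434", timeout=10):
    """Query a running Ollama server for its locally installed models."""
    url = f"{base_url.rstrip('/')}/api/tags"
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)
```

With the server running, `is_model_installed(fetch_tags(), "llama3.2")` should report whether the `ollama pull` step succeeded.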

OpenAI

Best for: highest overall translation quality, wide model selection

OpenAI provides industry-leading language models through its API, including the latest GPT models and the new Responses API format.

Available Models

- gpt-5 (latest flagship model)

- gpt-5-mini (smaller, faster alternative)

- gpt-4.1 and gpt-4.1-mini

- gpt-4o and gpt-4o-mini (optimized models)

- o3 and o3-mini (advanced reasoning)

- o1 and o1-mini (previous generation)

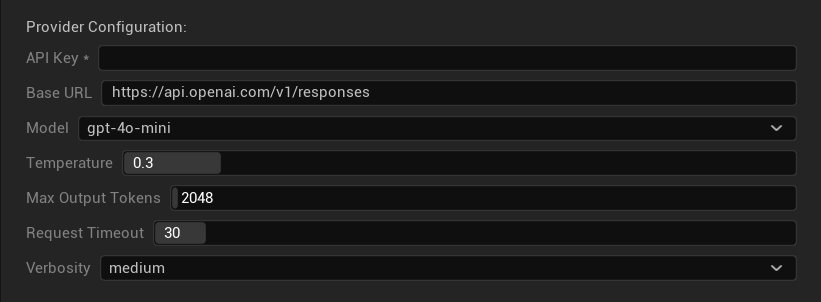

Configuration Options

- API Key: your OpenAI API key (required)

- Base URL: API endpoint (default: OpenAI's servers)

- Model: choose from the available GPT models

- Temperature: 0.0-2.0 (0.3 recommended for consistent translations)

- Max Output Tokens: 1-128,000 tokens

- Request Timeout: 5-300 seconds

- Verbosity: controls the level of detail in the response
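As a rough illustration, the options above could translate into a Responses API request along the following lines. The helper `build_openai_request` and the instruction text are hypothetical, and the field mapping is an assumption rather than the plugin's documented behavior. Not every model accepts every field (some reasoning models may reject `temperature`, and `verbosity` is only meaningful on newer models), so treat this as a sketch to adapt.

```python
def build_openai_request(api_key, model, text, target_lang,
                         temperature=0.3, max_output_tokens=1024,
                         verbosity=None,
                         base_url="https://api.openai.com/v1"):
    """Return (url, headers, body) for a Responses API translation request.

    Hypothetical helper: it only builds the request, it does not send it.
    """
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    body = {
        "model": model,
        "instructions": f"Translate the user's text into {target_lang}.",
        "input": text,
        "temperature": temperature,              # Temperature
        "max_output_tokens": max_output_tokens,  # Max Output Tokens
    }
    if verbosity is not None:
        # Verbosity is only sent when set (assumed mapping to text.verbosity).
        body["text"] = {"verbosity": verbosity}
    return f"{base_url.rstrip('/')}/responses", headers, body
```

Leaving `verbosity` as `None` omits the field entirely, which keeps the request valid for models that do not recognize it.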

Strengths

- ✅ Consistently high-quality translations

- ✅ Excellent context understanding

- ✅ Strong format preservation

- ✅ Broad language support

- ✅ Reliable API uptime

Considerations

- 💰 Higher cost per request

- 🌐 Requires an internet connection

- ⏱️ Usage limits depend on your tier

Anthropic Claude

Best for: nuanced translations, creative content, safety-focused applications

Claude models excel at understanding context and nuance, making them well suited to narrative-heavy games and complex localization scenarios.

Available Models

- claude-opus-4-1-20250805 (latest flagship model)

- claude-opus-4-20250514

- claude-sonnet-4-20250514

- claude-3-7-sonnet-20250219

- claude-3-5-haiku-20241022 (fast and efficient)

- claude-3-haiku-20240307

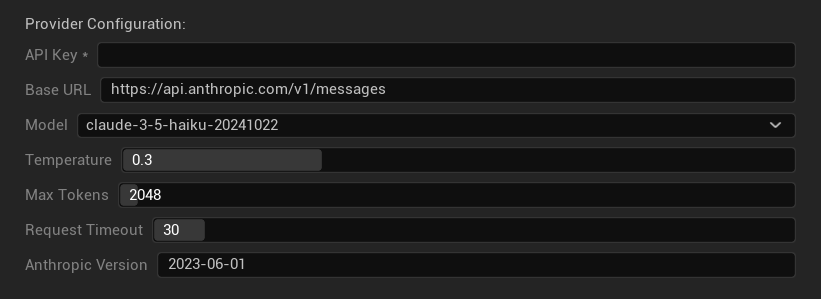

Configuration Options

- API Key: your Anthropic API key (required)

- Base URL: Claude API endpoint

- Model: choose from the Claude model family

- Temperature: 0.0-1.0 (0.3 recommended)

- Max Tokens: 1-64,000 tokens

- Request Timeout: 5-300 seconds

- Anthropic Version: API version header
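The sketch below shows one way these options might appear in a Messages API request, including the `anthropic-version` header that the Anthropic Version setting refers to. The helper `build_claude_request`, the system prompt, and the default version string `2023-06-01` are assumptions for illustration; the plugin may build its request differently.

```python
def build_claude_request(api_key, model, text, target_lang,
                         temperature=0.3, max_tokens=1024,
                         anthropic_version="2023-06-01",
                         base_url="https://api.anthropic.com"):
    """Return (url, headers, body) for a Messages API translation request.

    Hypothetical helper: it only builds the request, it does not send it.
    """
    # The documented temperature range for this provider is 0.0-1.0.
    if not 0.0 <= temperature <= 1.0:
        raise ValueError("temperature must be between 0.0 and 1.0")
    headers = {
        "x-api-key": api_key,
        "anthropic-version": anthropic_version,  # Anthropic Version
        "content-type": "application/json",
    }
    body = {
        "model": model,
        "max_tokens": max_tokens,                # Max Tokens
        "temperature": temperature,              # Temperature
        "system": f"Translate the user's text into {target_lang}.",
        "messages": [{"role": "user", "content": text}],
    }
    return f"{base_url.rstrip('/')}/v1/messages", headers, body
```

Note that the temperature range here is narrower than for the other providers, which is why the sketch validates it before building the request.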

Strengths

- ✅ Exceptional context awareness

- ✅ Great for creative/narrative content

- ✅ Strong safety features

- ✅ Detailed reasoning capabilities

- ✅ Excellent instruction following

Considerations

- 💰 Premium pricing model

- 🌐 Requires an internet connection

- 📏 Token limits vary by model

DeepSeek

Best for: cost-effective translation, high throughput, budget-conscious projects

DeepSeek offers competitive translation quality at a much lower price than other providers, making it well suited to large-scale localization projects.

Available Models

- deepseek-chat (general purpose, recommended)

- deepseek-reasoner (enhanced reasoning capabilities)

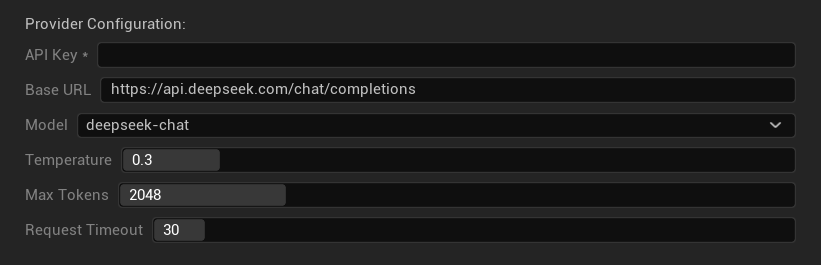

Configuration Options

- API Key: your DeepSeek API key (required)

- Base URL: DeepSeek API endpoint

- Model: choose between the chat and reasoner models

- Temperature: 0.0-2.0 (0.3 recommended)

- Max Tokens: 1-8,192 tokens

- Request Timeout: 5-300 seconds
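For reference, a request built from these options might look like the sketch below, which targets DeepSeek's OpenAI-compatible chat completions endpoint. The helper `build_deepseek_request` and the system prompt are hypothetical, and the default base URL is an assumption; use whatever endpoint you configured in the plugin.

```python
def build_deepseek_request(api_key, text, target_lang,
                           model="deepseek-chat", temperature=0.3,
                           max_tokens=1024,
                           base_url="https://api.deepseek.com"):
    """Return (url, headers, body) for a chat completions translation request.

    Hypothetical helper: it only builds the request, it does not send it.
    """
    # The documented Max Tokens range for this provider is 1-8,192.
    if not 1 <= max_tokens <= 8192:
        raise ValueError("max_tokens must be between 1 and 8192")
    headers = {
        "Authorization": f"Bearer {api_key}",
        "Content-Type": "application/json",
    }
    body = {
        "model": model,
        "temperature": temperature,  # Temperature
        "max_tokens": max_tokens,    # Max Tokens
        "messages": [
            {"role": "system",
             "content": f"Translate the user's text into {target_lang}."},
            {"role": "user", "content": text},
        ],
    }
    return f"{base_url.rstrip('/')}/chat/completions", headers, body
```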

Strengths

- ✅ Very cost-effective

- ✅ Good translation quality

- ✅ Fast response times

- ✅ Simple configuration

- ✅ High rate limits

Considerations

- 📏 Lower token limits

- 🆕 Newer provider (shorter track record)

- 🌐 Requires an internet connection

Google Gemini

Best for: multilingual projects, cost-effective translation, Google ecosystem integration

Gemini models offer strong multilingual capabilities with competitive pricing and unique features such as a thinking mode for enhanced reasoning.

Available Models

- gemini-2.5-pro (latest flagship model, with thinking)

- gemini-2.5-flash (fast, with thinking support)

- gemini-2.5-flash-lite (lightweight version)

- gemini-2.0-flash and gemini-2.0-flash-lite

- gemini-1.5-pro and gemini-1.5-flash

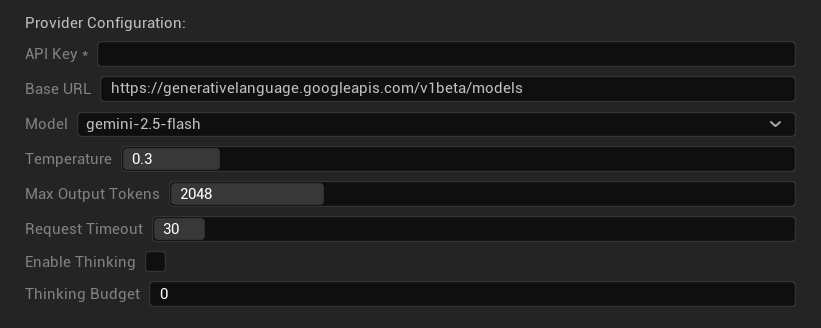

Configuration Options

- API Key: your Google AI API key (required)

- Base URL: Gemini API endpoint

- Model: choose from the Gemini model family

- Temperature: 0.0-2.0 (0.3 recommended)

- Max Output Tokens: 1-8,192 tokens

- Request Timeout: 5-300 seconds

- Enable Thinking: turns on enhanced reasoning for 2.5 models

- Thinking Budget: controls how many tokens are allocated to thinking
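The thinking options are easier to picture next to the request they influence. The sketch below builds a `generateContent` request and maps Enable Thinking and Thinking Budget onto `generationConfig.thinkingConfig.thinkingBudget`. The helper `build_gemini_request` is hypothetical, and sending a budget of 0 when thinking is disabled is an assumption about how such a toggle could work; not every model accepts a budget of 0, so check the model's documentation.

```python
def build_gemini_request(api_key, model, text, target_lang,
                         temperature=0.3, max_output_tokens=1024,
                         enable_thinking=False, thinking_budget=1024,
                         base_url="https://generativelanguage.googleapis.com/v1beta"):
    """Return (url, headers, body) for a generateContent translation request.

    Hypothetical helper: it only builds the request, it does not send it.
    """
    headers = {
        "x-goog-api-key": api_key,
        "Content-Type": "application/json",
    }
    generation_config = {
        "temperature": temperature,            # Temperature
        "maxOutputTokens": max_output_tokens,  # Max Output Tokens
        # Assumed mapping: the budget when thinking is on, 0 when it is off.
        "thinkingConfig": {
            "thinkingBudget": thinking_budget if enable_thinking else 0,
        },
    }
    body = {
        "systemInstruction": {
            "parts": [{"text": f"Translate the user's text into {target_lang}."}],
        },
        "contents": [{"role": "user", "parts": [{"text": text}]}],
        "generationConfig": generation_config,
    }
    url = f"{base_url.rstrip('/')}/models/{model}:generateContent"
    return url, headers, body
```

Because thinking tokens are billed and count toward usage, a smaller budget is a reasonable starting point for short UI strings.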

Strengths

- ✅ Strong multilingual support

- ✅ Competitive pricing

- ✅ Advanced reasoning (thinking mode)

- ✅ Google ecosystem integration

- ✅ Regular model updates

Considerations

- 🧠 Thinking mode increases token usage

- 📏 Token limits vary by model

- 🌐 Requires an internet connection

Choosing the Right Provider

| Provider | Best For | Quality | Cost | Setup | Privacy |
|---|---|---|---|---|---|
| Ollama | Privacy/offline use | Variable* | Free | Advanced | Local |
| OpenAI | Highest quality | ⭐⭐⭐⭐⭐ | 💰💰💰 | Easy | Cloud |
| Claude | Creative content | ⭐⭐⭐⭐⭐ | 💰💰💰💰 | Easy | Cloud |
| DeepSeek | Budget-limited projects | ⭐⭐⭐⭐ | 💰 | Easy | Cloud |
| Gemini | Multilingual | ⭐⭐⭐⭐ | 💰 | Easy | Cloud |

*Ollama quality varies significantly depending on the local model used; some recent local models can match or exceed cloud providers.

Provider Configuration Tips

For all cloud providers:

- Store API keys securely and do not commit them to version control

- Start with a conservative temperature setting (0.3) for consistent translations

- Monitor your API usage and costs

- Test with small batches before running large translations
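Two of the tips above lend themselves to a short illustration: keeping keys out of version control by reading them from the environment, and splitting work into small batches for a trial run. Both helpers are hypothetical and independent of the plugin, which stores its keys in its own settings; the environment variable name is whatever you choose.

```python
import os


def load_api_key(env_var):
    """Read an API key from an environment variable instead of a tracked file."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return key


def batches(items, size):
    """Yield consecutive slices of `items`, each with at most `size` entries."""
    if size < 1:
        raise ValueError("size must be at least 1")
    for start in range(0, len(items), size):
        yield items[start:start + size]


# Try the first small batch before committing to the whole string table.
strings = ["Start Game", "Options", "Quit", "Load", "Save"]
first_batch = next(batches(strings, 2))
print(first_batch)
```

Reviewing the output of one small batch gives a quick read on quality and cost before a full run.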

For Ollama:

- Make sure you have enough RAM (8GB+ recommended for larger models)

- Use SSD storage for faster model loading

- Consider GPU acceleration for faster inference

- Test locally before relying on it for production translations