Translation Providers

AI Localization Automator supports five AI providers, each with its own strengths and configuration options. Choose the provider that best fits your project's needs, budget, and quality requirements.

Ollama (Local AI)

Best for: Privacy-sensitive projects, offline translation, unlimited usage

Ollama runs AI models locally on your machine, giving you full privacy and control with no API costs and no internet requirement.

Popular Models

- llama3.2 (Recommended general-purpose model)

- mistral (Efficient alternative)

- codellama (Code-aware translations)

- Many more community models

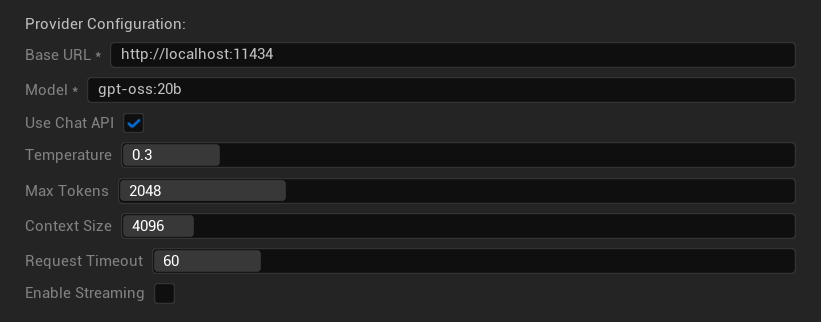

Configuration Options

- Base URL: Local Ollama server (default: http://localhost:11434)

- Model: Name of a locally installed model (required)

- Use Chat API: Enable for better handling of conversational content

- Temperature: 0.0-2.0 (0.3 recommended)

- Max Tokens: 1-8,192 tokens

- Context Size: 512-32,768 tokens

- Request Timeout: 10-300 seconds (local models can be slower)

- Enable Streaming: Process responses in real time as they arrive
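To make these settings more concrete, here is a minimal Python sketch of how they might map onto a request to Ollama's `/api/chat` endpoint. The function name, the prompt wording, and the default values for `max_tokens` and `context_size` are illustrative assumptions; the plugin's actual request may differ.

```python
import json

def build_ollama_chat_request(text, target_language, model="llama3.2",
                              temperature=0.3, max_tokens=1024,
                              context_size=4096, stream=False):
    """Build a JSON body for POST <Base URL>/api/chat (hypothetical helper)."""
    return {
        "model": model,          # "Model" setting: must be installed locally
        "stream": stream,        # "Enable Streaming" setting
        "messages": [
            {"role": "system",
             "content": f"Translate the user's text into {target_language}. "
                        "Reply with the translation only."},
            {"role": "user", "content": text},
        ],
        "options": {
            "temperature": temperature,   # "Temperature" setting
            "num_predict": max_tokens,    # "Max Tokens" setting
            "num_ctx": context_size,      # "Context Size" setting
        },
    }

body = build_ollama_chat_request("Start Game", "German")
print(json.dumps(body, indent=2))
```

With the default base URL, this body would be sent to http://localhost:11434/api/chat.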

Advantages

- ✅ Full privacy (data never leaves your machine)

- ✅ No API costs or usage limits

- ✅ Works offline

- ✅ Full control over model parameters

- ✅ Wide selection of community models

- ✅ No vendor lock-in

Considerations

- 💻 Requires local setup and capable hardware

- ⚡ Generally slower than cloud providers

- 🔧 Setup is more technical

- 📊 Translation quality varies significantly by model (some can outperform cloud providers)

- 💾 Models require substantial storage space

Setting Up Ollama

- Install Ollama: Download it from ollama.ai and install it on your system

- Download models: Use `ollama pull llama3.2` to download the model you chose

- Start the server: Ollama starts automatically, or you can start it with `ollama serve`

- Configure the plugin: Set the base URL and model name in the plugin settings

- Test the connection: The plugin checks connectivity when you apply the configuration
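If you want to confirm the server and model yourself before configuring the plugin, the sketch below queries Ollama's `/api/tags` endpoint, which lists locally installed models. The helper names are hypothetical, and this is not necessarily how the plugin performs its own check.

```python
import json
import urllib.request

def installed_models(tags_response):
    """Extract model names from an /api/tags response."""
    return [m["name"] for m in tags_response.get("models", [])]

def has_model(tags_response, wanted):
    """True if `wanted` is installed, with or without a tag such as ':latest'."""
    for name in installed_models(tags_response):
        if name == wanted or name.split(":")[0] == wanted:
            return True
    return False

def fetch_tags(base_url="http://localhost:11434", timeout=10):
    """Ask a running Ollama server which models it has (needs `ollama serve`)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
        return json.load(resp)

# Offline demonstration with a response shaped like Ollama's:
sample = {"models": [{"name": "llama3.2:latest"}, {"name": "mistral:7b"}]}
print(has_model(sample, "llama3.2"))
print(has_model(sample, "codellama"))
```

Against a live server, `has_model(fetch_tags(), "llama3.2")` performs the same check on real data.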

OpenAI

Best for: Highest overall translation quality, extensive model selection

OpenAI provides industry-leading language models through its API, including the latest GPT models and the new Responses API format.

Available Models

- gpt-5 (Latest flagship model)

- gpt-5-mini (Smaller, faster variant)

- gpt-4.1 and gpt-4.1-mini

- gpt-4o and gpt-4o-mini (Optimized models)

- o3 and o3-mini (Advanced reasoning)

- o1 and o1-mini (Previous generation)

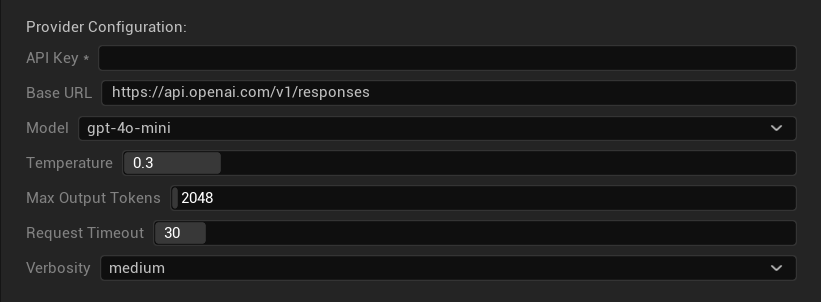

Configuration Options

- API Key: Your OpenAI API key (required)

- Base URL: API endpoint (default: OpenAI's servers)

- Model: Choose from the available GPT models

- Temperature: 0.0-2.0 (0.3 recommended for consistent translations)

- Max Output Tokens: 1-128,000 tokens

- Request Timeout: 5-300 seconds

- Verbosity: Controls the level of detail in the response
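The ranges listed above can be checked before a long translation run. The following Python sketch validates a settings object against them; the class, field names, and default values are hypothetical and only mirror the options documented here, not the plugin's internal types.

```python
from dataclasses import dataclass

@dataclass
class OpenAISettings:
    # Field names and defaults are illustrative assumptions.
    api_key: str
    model: str = "gpt-4o-mini"
    temperature: float = 0.3
    max_output_tokens: int = 4096
    request_timeout: int = 60

def validate(settings):
    """Return a list of problems; an empty list means the settings are in range."""
    problems = []
    if not settings.api_key:
        problems.append("API Key is required")
    if not 0.0 <= settings.temperature <= 2.0:
        problems.append("Temperature must be between 0.0 and 2.0")
    if not 1 <= settings.max_output_tokens <= 128_000:
        problems.append("Max Output Tokens must be between 1 and 128,000")
    if not 5 <= settings.request_timeout <= 300:
        problems.append("Request Timeout must be between 5 and 300 seconds")
    return problems

print(validate(OpenAISettings(api_key="sk-example")))
print(validate(OpenAISettings(api_key="", temperature=3.0, request_timeout=1)))
```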

Strengths

- ✅ Consistently high translation quality

- ✅ Excellent context understanding

- ✅ Reliable preservation of formatting

- ✅ Broad language support

- ✅ Reliable API uptime

Considerations

- 💰 Higher cost per request

- 🌐 Requires an internet connection

- ⏱️ Usage limits depend on your tier

Anthropic Claude

Best for: Nuanced translations, creative content, safety-focused applications

Claude models excel at understanding context and nuance, which makes them well suited to narrative-heavy games and complex localization scenarios.

Available Models

- claude-opus-4-1-20250805 (Latest flagship)

- claude-opus-4-20250514

- claude-sonnet-4-20250514

- claude-3-7-sonnet-20250219

- claude-3-5-haiku-20241022 (Fast and efficient)

- claude-3-haiku-20240307

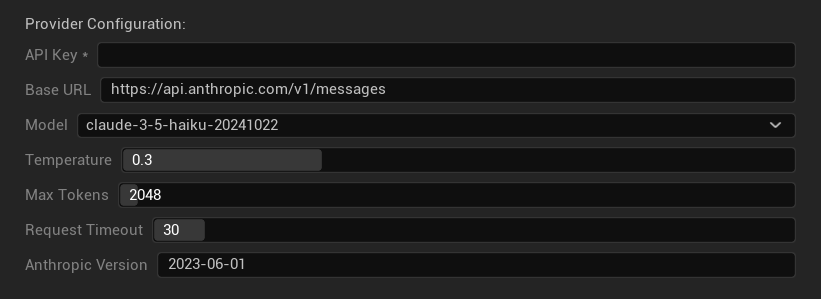

Configuration Options

- API Key: Your Anthropic API key (required)

- Base URL: Claude API endpoint

- Model: Choose from the Claude model family

- Temperature: 0.0-1.0 (0.3 recommended)

- Max Tokens: 1-64,000 tokens

- Request Timeout: 5-300 seconds

- Anthropic Version: API version header
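As an illustration of where these options end up, the sketch below builds the headers and body for Anthropic's Messages API (`POST /v1/messages`). The function name, prompt wording, and the `2023-06-01` default for the version header are assumptions made for the example; the plugin may construct its request differently.

```python
def build_claude_request(api_key, text, target_language,
                         model="claude-3-5-haiku-20241022",
                         temperature=0.3, max_tokens=1024,
                         anthropic_version="2023-06-01"):
    """Return (headers, body) for a Messages API call (hypothetical helper)."""
    headers = {
        "x-api-key": api_key,                    # "API Key" setting
        "anthropic-version": anthropic_version,  # "Anthropic Version" setting
        "content-type": "application/json",
    }
    body = {
        "model": model,
        "max_tokens": max_tokens,     # required by the Messages API
        "temperature": temperature,   # 0.0-1.0 for Claude
        "system": f"Translate the user's text into {target_language}. "
                  "Reply with the translation only.",
        "messages": [{"role": "user", "content": text}],
    }
    return headers, body

headers, body = build_claude_request("sk-ant-example", "Start Game", "German")
print(headers["anthropic-version"], body["model"])
```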

Strengths

- ✅ Exceptional context awareness

- ✅ Great for creative and narrative content

- ✅ Strong safety features

- ✅ Detailed reasoning capabilities

- ✅ Excellent instruction following

Considerations

- 💰 Premium pricing model

- 🌐 Requires an internet connection

- 📏 Token limits vary by model

DeepSeek

Best for: Cost-effective translation, high throughput, budget-conscious projects

DeepSeek offers competitive translation quality at a fraction of the cost of other providers, which makes it a strong fit for large-scale localization projects.

Available Models

- deepseek-chat (General purpose, recommended)

- deepseek-reasoner (Enhanced reasoning capabilities)

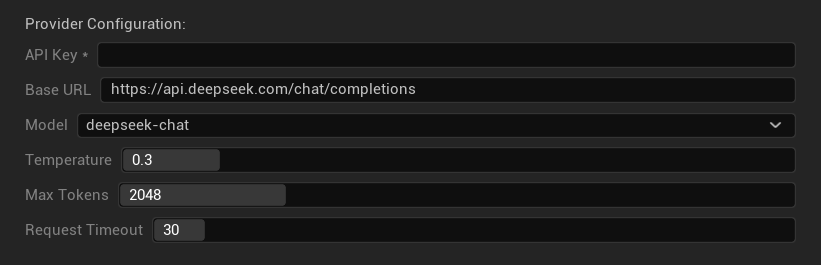

Configuration Options

- API Key: Your DeepSeek API key (required)

- Base URL: DeepSeek API endpoint

- Model: Choose between the chat and reasoning models

- Temperature: 0.0-2.0 (0.3 recommended)

- Max Tokens: 1-8,192 tokens

- Request Timeout: 5-300 seconds
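DeepSeek exposes an OpenAI-compatible chat completions endpoint, so the options above map onto a familiar request shape. The sketch below only constructs the request object without sending it; the helper name, prompt wording, and default values are illustrative assumptions.

```python
import json
import urllib.request

def build_deepseek_request(api_key, text, target_language,
                           model="deepseek-chat", temperature=0.3,
                           max_tokens=1024,
                           base_url="https://api.deepseek.com"):
    """Build (but do not send) a chat completions request (hypothetical helper)."""
    body = {
        "model": model,
        "temperature": temperature,
        "max_tokens": max_tokens,
        "messages": [
            {"role": "system",
             "content": f"Translate the user's text into {target_language}. "
                        "Reply with the translation only."},
            {"role": "user", "content": text},
        ],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}",
                 "Content-Type": "application/json"},
        method="POST",
    )

request = build_deepseek_request("sk-example", "Start Game", "German")
print(request.full_url)
# To send it: urllib.request.urlopen(request, timeout=60)
```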

Strengths

- ✅ Very cost-effective

- ✅ Good translation quality

- ✅ Fast response times

- ✅ Simple configuration

- ✅ High rate limits

Considerations

- 📏 Lower token limits

- 🆕 Newer provider (shorter track record)

- 🌐 Requires an internet connection

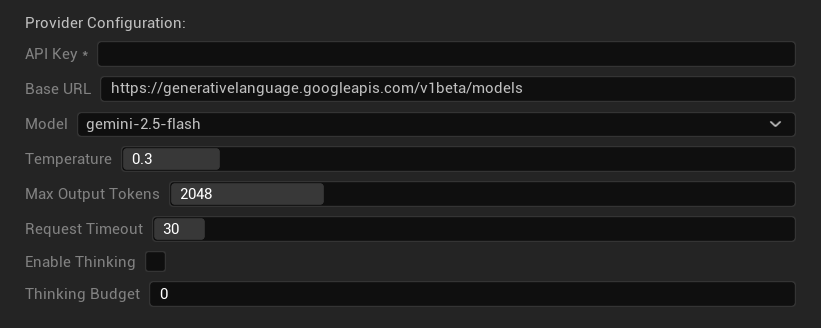

Google Gemini

Best for: Multilingual projects, cost-effective translation, Google ecosystem integration

Gemini models offer strong multilingual capabilities at competitive prices, along with unique features such as a thinking mode for improved reasoning.

Available Models

- gemini-2.5-pro (Latest flagship with thinking)

- gemini-2.5-flash (Fast, with thinking support)

- gemini-2.5-flash-lite (Lightweight variant)

- gemini-2.0-flash and gemini-2.0-flash-lite

- gemini-1.5-pro and gemini-1.5-flash

Configuration Options

- API Key: Your Google AI API key (required)

- Base URL: Gemini API endpoint

- Model: Choose from the Gemini model family

- Temperature: 0.0-2.0 (0.3 recommended)

- Max Output Tokens: 1-8,192 tokens

- Request Timeout: 5-300 seconds

- Enable Thinking: Turns on enhanced reasoning for 2.5 models

- Thinking Budget: Controls how many tokens are allocated to thinking
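The sketch below shows how these options might translate into a Gemini `generateContent` request, including the thinking budget for 2.5 models. The helper name, prompt wording, and default values are assumptions for illustration; the API key would travel in the `x-goog-api-key` header.

```python
def build_gemini_request(text, target_language, model="gemini-2.5-flash",
                         temperature=0.3, max_output_tokens=1024,
                         enable_thinking=False, thinking_budget=512,
                         base_url="https://generativelanguage.googleapis.com/v1beta"):
    """Return (url, body) for a generateContent call (hypothetical helper)."""
    generation_config = {
        "temperature": temperature,
        "maxOutputTokens": max_output_tokens,
    }
    # Only 2.5 models support thinking, so the budget is added just for them.
    if enable_thinking and model.startswith("gemini-2.5"):
        generation_config["thinkingConfig"] = {"thinkingBudget": thinking_budget}
    prompt = (f"Translate the following text into {target_language}. "
              f"Reply with the translation only.\n\n{text}")
    body = {
        "contents": [{"role": "user", "parts": [{"text": prompt}]}],
        "generationConfig": generation_config,
    }
    return f"{base_url}/models/{model}:generateContent", body

url, body = build_gemini_request("Start Game", "German",
                                 enable_thinking=True, thinking_budget=256)
print(url)
print(body["generationConfig"])
```

Thinking tokens count toward usage, so a small budget is a reasonable starting point for short UI strings.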

Strengths

- ✅ Strong multilingual support

- ✅ Competitive pricing

- ✅ Advanced reasoning (thinking mode)

- ✅ Google ecosystem integration

- ✅ Regular model updates

Considerations

- 🧠 Thinking mode increases token usage

- 📏 Token limits vary by model

- 🌐 Requires an internet connection

Choosing the Right Provider

| Provider | Best For | Quality | Cost | Setup | Privacy |
|---|---|---|---|---|---|
| Ollama | Privacy/offline | Variable* | Free | Advanced | Local |
| OpenAI | Highest quality | ⭐⭐⭐⭐⭐ | 💰💰💰 | Easy | Cloud |
| Claude | Creative content | ⭐⭐⭐⭐⭐ | 💰💰💰💰 | Easy | Cloud |
| DeepSeek | Budget projects | ⭐⭐⭐⭐ | 💰 | Easy | Cloud |
| Gemini | Multilingual | ⭐⭐⭐⭐ | 💰 | Easy | Cloud |

*Quality for Ollama varies significantly with the local model used; some modern local models can match or exceed cloud providers.
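For teams that want to apply this comparison programmatically, the table can be encoded as data and filtered. The sketch below is only an illustration: the cost scale is the number of 💰 symbols in the table (0 for Ollama), and the function name is hypothetical.

```python
# Cost is the count of money-bag symbols in the comparison table (Ollama is free).
PROVIDERS = {
    "Ollama":   {"best_for": "Privacy/offline",  "cost": 0, "privacy": "Local"},
    "OpenAI":   {"best_for": "Highest quality",  "cost": 3, "privacy": "Cloud"},
    "Claude":   {"best_for": "Creative content", "cost": 4, "privacy": "Cloud"},
    "DeepSeek": {"best_for": "Budget projects",  "cost": 1, "privacy": "Cloud"},
    "Gemini":   {"best_for": "Multilingual",     "cost": 1, "privacy": "Cloud"},
}

def shortlist(max_cost, need_local=False):
    """Return provider names within the cost ceiling, optionally local-only."""
    names = []
    for name, info in PROVIDERS.items():
        if info["cost"] > max_cost:
            continue
        if need_local and info["privacy"] != "Local":
            continue
        names.append(name)
    return names

print(shortlist(max_cost=1))
print(shortlist(max_cost=4, need_local=True))
```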

Provider Configuration Tips

For all cloud providers:

- Store API keys securely and never commit them to version control

- Start with a conservative temperature setting (0.3) for consistent translations

- Monitor your API usage and costs

- Test on small batches before running large translation jobs
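Two of these tips are easy to illustrate in a helper script that runs outside the plugin: reading the key from an environment variable instead of a file under version control, and trimming a job to a small trial batch. The environment variable name and function names below are hypothetical.

```python
import os

def load_api_key(env_var):
    """Read an API key from the environment so it never lands in the repository."""
    key = os.environ.get(env_var, "").strip()
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable before translating")
    return key

def first_batch(entries, size=20):
    """Take a small sample of entries for a trial run before the full job."""
    return entries[:size]

if __name__ == "__main__":
    strings = [f"string_{i}" for i in range(500)]
    print(len(first_batch(strings)), "entries in the trial batch")
    try:
        load_api_key("EXAMPLE_PROVIDER_API_KEY")  # hypothetical variable name
        print("API key loaded")
    except RuntimeError as err:
        print(err)
```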

For Ollama:

- Make sure you have enough RAM (8 GB+ recommended for larger models)

- Use an SSD for faster model loading

- Consider using a GPU to speed up inference

- Test locally before relying on it for production translations