Pixel Streaming Audio Capture

Pixel Streaming is a plugin for Unreal Engine that streams rendered frames and synchronizes input/output via WebRTC. The application runs and renders on the server side, whereas the client side (typically a web browser) displays the stream and handles user interaction. For more details on Pixel Streaming and its setup, refer to the Pixel Streaming documentation.

Pixel Streaming vs Pixel Streaming 2

This plugin supports both Pixel Streaming versions available in Unreal Engine:

- Pixel Streaming - The original plugin, supported by this extension from UE 5.2 onward and still actively used in current engine versions

- Pixel Streaming 2 - Introduced in UE 5.5 as a next-generation implementation with an improved internal architecture. Learn more about Pixel Streaming 2

Both versions are fully supported and available in the latest Unreal Engine releases. Choose the version that matches your project's Pixel Streaming setup.

The API for both versions is identical, with the only difference being that Pixel Streaming 2 classes and functions include "2" in their names (e.g., UPixelStreamingCapturableSoundWave vs UPixelStreaming2CapturableSoundWave).

Compatibility

This solution works with:

- Official Pixel Streaming infrastructure (Epic Games reference implementation)

- Third-party Pixel Streaming providers, including:

  - Vagon.io

  - Arcane Mirage

  - Eagle 3D Streaming

  - Other WebRTC-based streaming solutions

- Operating systems: Windows and Linux servers

The implementation has been tested across these environments and functions correctly regardless of the Pixel Streaming hosting solution used.

Extension Plugin Installation

This feature is provided as an extension to the Runtime Audio Importer plugin. To use it, you need to:

- Ensure the Runtime Audio Importer plugin is already installed in your project

- Download the extension plugin for your Pixel Streaming version:

- Extract the folder from the downloaded archive into the Plugins folder of your project (create this folder if it doesn't exist)

- Rebuild your project (this extension requires a C++ project)

- These extensions are provided as source code and require a C++ project to use

- Pixel Streaming extension: Supported in UE 5.2 and later

- Pixel Streaming 2 extension: Supported in UE 5.5 and later

- For more information on how to build plugins manually, see the Building Plugins tutorial

Overview

The Pixel Streaming Capturable Sound Wave extends the standard Capturable Sound Wave to allow capturing audio directly from Pixel Streaming clients' microphones. This feature enables you to:

- Capture audio from browsers connected via Pixel Streaming

- Process audio from specific players/peers

- Implement voice chat, voice commands, or audio recording from remote users

Basic Usage

Creating a Pixel Streaming Capturable Sound Wave

First, you need to create a Pixel Streaming Capturable Sound Wave object:

Pixel Streaming (C++):

PixelStreamingSoundWave = UPixelStreamingCapturableSoundWave::CreatePixelStreamingCapturableSoundWave();

Pixel Streaming 2 (Blueprint and C++):

Use the Create Pixel Streaming 2 Capturable Sound Wave node (same as Pixel Streaming but with "2" in the name)

PixelStreamingSoundWave = UPixelStreaming2CapturableSoundWave::CreatePixelStreaming2CapturableSoundWave();

Hold the Pixel Streaming Capturable Sound Wave in a strong reference so that it is not destroyed prematurely by garbage collection (e.g., assign it to a separate variable in Blueprints, or store it in a UPROPERTY() member in C++).

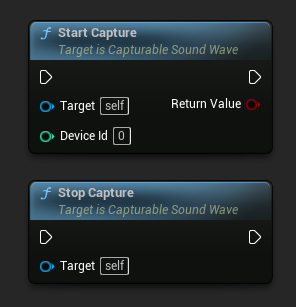

Starting and Stopping Capture

You can start and stop the audio capture with simple function calls:

Pixel Streaming (C++):

// Assuming PixelStreamingSoundWave is a reference to a UPixelStreamingCapturableSoundWave object

// Start capturing audio (the device ID parameter is ignored for Pixel Streaming)

PixelStreamingSoundWave->StartCapture(0);

// Stop capturing audio

PixelStreamingSoundWave->StopCapture();

Pixel Streaming 2 (Blueprint and C++):

Use the same Start Capture and Stop Capture nodes with your Pixel Streaming 2 Capturable Sound Wave

// Assuming PixelStreamingSoundWave is a reference to a UPixelStreaming2CapturableSoundWave object

// Start capturing audio (the device ID parameter is ignored for Pixel Streaming 2)

PixelStreamingSoundWave->StartCapture(0);

// Stop capturing audio

PixelStreamingSoundWave->StopCapture();

The DeviceId parameter in StartCapture is ignored for Pixel Streaming Capturable Sound Waves, as the capture source is determined automatically or by the player info you set.

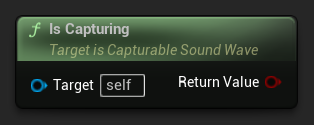

Checking Capture Status

You can check if the sound wave is currently capturing audio:

Pixel Streaming (C++):

// Assuming PixelStreamingSoundWave is a reference to a UPixelStreamingCapturableSoundWave object

bool bIsCapturing = PixelStreamingSoundWave->IsCapturing();

Pixel Streaming 2 (Blueprint and C++):

Use the same Is Capturing node with your Pixel Streaming 2 Capturable Sound Wave

// Assuming PixelStreamingSoundWave is a reference to a UPixelStreaming2CapturableSoundWave object

bool bIsCapturing = PixelStreamingSoundWave->IsCapturing();

Complete Example

Here's a complete example of how to set up Pixel Streaming audio capture:

Pixel Streaming (C++):

This is a basic code example for capturing audio data from a Pixel Streaming client.

The example uses the CapturePixelStreamingAudioExample function located in the UPixelStreamingAudioExample class within the EXAMPLEMODULE module.

To run the example, add both the RuntimeAudioImporter and PixelStreaming modules to either PublicDependencyModuleNames or PrivateDependencyModuleNames in the .Build.cs file, and make sure the corresponding plugins are enabled in your project's .uproject file.

#pragma once

#include "CoreMinimal.h"

#include "UObject/Object.h"

#include "PixelStreamingAudioExample.generated.h"

UCLASS(BlueprintType)

class EXAMPLEMODULE_API UPixelStreamingAudioExample : public UObject

{

GENERATED_BODY()

public:

UFUNCTION(BlueprintCallable)

void CapturePixelStreamingAudioExample();

private:

// Keep a strong reference to prevent garbage collection

UPROPERTY()

class UPixelStreamingCapturableSoundWave* PixelStreamingSoundWave;

};

#include "PixelStreamingAudioExample.h"

#include "Sound/PixelStreamingCapturableSoundWave.h"

#include "Kismet/GameplayStatics.h"

#include "Engine/World.h"

#include "TimerManager.h"

void UPixelStreamingAudioExample::CapturePixelStreamingAudioExample()

{

// Create a Pixel Streaming Capturable Sound Wave

PixelStreamingSoundWave = UPixelStreamingCapturableSoundWave::CreatePixelStreamingCapturableSoundWave();

// Start capturing audio

PixelStreamingSoundWave->StartCapture(0);

// Play the sound wave to hear the captured audio

UGameplayStatics::PlaySound2D(GetWorld(), PixelStreamingSoundWave);

// Set up a timer to stop capture after 30 seconds (just for demonstration)

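FTimerHandle TimerHandle;

// CreateWeakLambda skips the callback if this object is destroyed before the timer fires

GetWorld()->GetTimerManager().SetTimer(TimerHandle, FTimerDelegate::CreateWeakLambda(this, [this]()

{

PixelStreamingSoundWave->StopCapture();

}), 30.0f, false);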

}

Pixel Streaming 2 (Blueprint and C++):

The Blueprint setup is identical to Pixel Streaming, but use the Create Pixel Streaming 2 Capturable Sound Wave node instead

This is a basic code example for capturing audio data from a Pixel Streaming 2 client.

The example uses the CapturePixelStreaming2AudioExample function located in the UPixelStreaming2AudioExample class within the EXAMPLEMODULE module.

To run the example, add both the RuntimeAudioImporter and PixelStreaming2 modules to either PublicDependencyModuleNames or PrivateDependencyModuleNames in the .Build.cs file, and make sure the corresponding plugins are enabled in your project's .uproject file.

#pragma once

#include "CoreMinimal.h"

#include "UObject/Object.h"

#include "PixelStreaming2AudioExample.generated.h"

UCLASS(BlueprintType)

class EXAMPLEMODULE_API UPixelStreaming2AudioExample : public UObject

{

GENERATED_BODY()

public:

UFUNCTION(BlueprintCallable)

void CapturePixelStreaming2AudioExample();

private:

// Keep a strong reference to prevent garbage collection

UPROPERTY()

class UPixelStreaming2CapturableSoundWave* PixelStreamingSoundWave;

};

#include "PixelStreaming2AudioExample.h"

#include "Sound/PixelStreaming2CapturableSoundWave.h"

#include "Kismet/GameplayStatics.h"

#include "Engine/World.h"

#include "TimerManager.h"

void UPixelStreaming2AudioExample::CapturePixelStreaming2AudioExample()

{

// Create a Pixel Streaming 2 Capturable Sound Wave

PixelStreamingSoundWave = UPixelStreaming2CapturableSoundWave::CreatePixelStreaming2CapturableSoundWave();

// Start capturing audio

PixelStreamingSoundWave->StartCapture(0);

// Play the sound wave to hear the captured audio

UGameplayStatics::PlaySound2D(GetWorld(), PixelStreamingSoundWave);

// Set up a timer to stop capture after 30 seconds (just for demonstration)

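FTimerHandle TimerHandle;

// CreateWeakLambda skips the callback if this object is destroyed before the timer fires

GetWorld()->GetTimerManager().SetTimer(TimerHandle, FTimerDelegate::CreateWeakLambda(this, [this]()

{

PixelStreamingSoundWave->StopCapture();

}), 30.0f, false);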

}

Working with Multiple Pixel Streaming Players

In scenarios where you have multiple Pixel Streaming clients connected simultaneously, you may need to capture audio from specific players. The following features help you manage this.

Getting Available Pixel Streaming Players

To identify which Pixel Streaming players are connected:

Pixel Streaming (C++):

UPixelStreamingCapturableSoundWave::GetAvailablePixelStreamingPlayers(FOnGetAvailablePixelStreamingPlayersResultNative::CreateWeakLambda(this, [](const TArray<FPixelStreamingPlayerInfo_RAI>& AvailablePlayers)

{

// Handle the list of available players

for (const FPixelStreamingPlayerInfo_RAI& PlayerInfo : AvailablePlayers)

{

UE_LOG(LogTemp, Log, TEXT("Available player: %s on streamer: %s"), *PlayerInfo.PlayerId, *PlayerInfo.StreamerId);

}

}));

Pixel Streaming 2 (Blueprint and C++):

Use the Get Available Pixel Streaming 2 Players node

UPixelStreaming2CapturableSoundWave::GetAvailablePixelStreaming2Players(FOnGetAvailablePixelStreaming2PlayersResultNative::CreateWeakLambda(this, [](const TArray<FPixelStreaming2PlayerInfo_RAI>& AvailablePlayers)

{

// Handle the list of available players

for (const FPixelStreaming2PlayerInfo_RAI& PlayerInfo : AvailablePlayers)

{

UE_LOG(LogTemp, Log, TEXT("Available player: %s on streamer: %s"), *PlayerInfo.PlayerId, *PlayerInfo.StreamerId);

}

}));

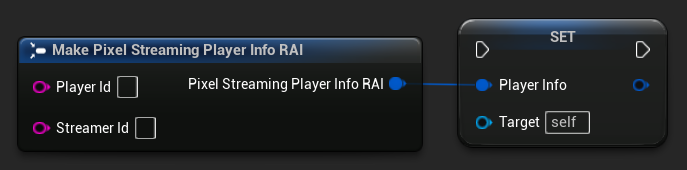

Setting the Player to Capture From

When you need to capture from a specific player:

Pixel Streaming (C++):

// Assuming PixelStreamingSoundWave is a reference to a UPixelStreamingCapturableSoundWave object

// and PlayerInfo is a FPixelStreamingPlayerInfo_RAI object from GetAvailablePixelStreamingPlayers

PixelStreamingSoundWave->PlayerInfo = PlayerInfo;

Pixel Streaming 2 (Blueprint and C++):

Use the Set Player To Capture From node with your Pixel Streaming 2 Capturable Sound Wave

// Assuming PixelStreamingSoundWave is a reference to a UPixelStreaming2CapturableSoundWave object

// and PlayerInfo is a FPixelStreaming2PlayerInfo_RAI object from GetAvailablePixelStreaming2Players

PixelStreamingSoundWave->PlayerInfo = PlayerInfo;

If you leave the Player ID empty, the sound wave will automatically listen to the first available player that connects. This is the default behavior and works well for single-player scenarios.
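The fallback rule above can be modeled outside the engine. The following is a minimal standalone C++ sketch, not the plugin's implementation: `PlayerInfo` and `SelectPlayer` are hypothetical names that only mirror the `PlayerId` and `StreamerId` fields shown earlier, and the sketch assumes that an empty ID means "use the first connected player".

```cpp
#include <string>
#include <vector>

// Hypothetical stand-in for the plugin's player info struct.
struct PlayerInfo {
    std::string PlayerId;
    std::string StreamerId;
};

// Returns the player to capture from, or nullptr if none qualifies:
// - empty RequestedId: the first connected player, if any;
// - otherwise: the connected player whose PlayerId matches.
const PlayerInfo* SelectPlayer(const std::vector<PlayerInfo>& Connected,
                               const std::string& RequestedId) {
    if (RequestedId.empty()) {
        return Connected.empty() ? nullptr : &Connected.front();
    }
    for (const PlayerInfo& Info : Connected) {
        if (Info.PlayerId == RequestedId) {
            return &Info;
        }
    }
    return nullptr;
}
```

Returning a null pointer for an unknown ID, rather than silently falling back to another player, is a design choice of this sketch; it makes it harder to capture the wrong user's microphone in a multi-player session by accident.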

Common Use Cases

Voice Chat Implementation

You can use Pixel Streaming Capturable Sound Waves to implement voice chat between remote users and local players:

- Create a Pixel Streaming Capturable Sound Wave for each connected player

- Set up a system to manage which players are currently speaking

- Use the Voice Activity Detection system to detect when users are speaking

- Spatialize the audio using Unreal Engine's audio system if needed
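The second step in the list above (tracking who is currently speaking) can be sketched independently of the engine. This is a minimal standalone C++ model rather than plugin code: `SpeakerTracker`, `OnVoiceActivity`, and the hold time are hypothetical, and the sketch assumes your voice activity detection reports a timestamp each time it detects speech for a given player.

```cpp
#include <map>
#include <string>
#include <vector>

// Hypothetical helper: a player counts as "speaking" if voice activity
// was reported for them within the last HoldSeconds.
class SpeakerTracker {
public:
    explicit SpeakerTracker(double InHoldSeconds) : HoldSeconds(InHoldSeconds) {}

    // Call whenever voice activity is detected for a player.
    void OnVoiceActivity(const std::string& PlayerId, double NowSeconds) {
        LastActivity[PlayerId] = NowSeconds;
    }

    // Call when a player disconnects so stale entries do not accumulate.
    void RemovePlayer(const std::string& PlayerId) {
        LastActivity.erase(PlayerId);
    }

    // Returns the IDs of players currently considered speaking, sorted by ID
    // (std::map iterates in key order).
    std::vector<std::string> GetSpeakers(double NowSeconds) const {
        std::vector<std::string> Speakers;
        for (const auto& Entry : LastActivity) {
            if (NowSeconds - Entry.second <= HoldSeconds) {
                Speakers.push_back(Entry.first);
            }
        }
        return Speakers;
    }

private:
    double HoldSeconds;
    std::map<std::string, double> LastActivity;
};
```

The hold time keeps a player marked as speaking across short pauses between words, which avoids flickering indicators in a voice chat UI.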

Voice Commands with Speech Recognition

You can implement voice command recognition for remote users by combining this feature with the Runtime Speech Recognizer plugin:

- Capture audio from Pixel Streaming clients using Pixel Streaming Capturable Sound Wave

- Feed the captured audio directly to the Runtime Speech Recognizer

- Process the recognized text in your game logic

Simply replace the standard Capturable Sound Wave with a Pixel Streaming Capturable Sound Wave (or Pixel Streaming 2 Capturable Sound Wave) in the Runtime Speech Recognizer examples, and it will work seamlessly with Pixel Streaming audio input.
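The last step, processing the recognized text in game logic, might look like the following standalone C++ sketch. It does not use the Runtime Speech Recognizer API: `NormalizeCommand` and `VoiceCommandTable` are hypothetical names, and the sketch assumes the recognizer hands you plain text that may contain inconsistent casing, punctuation, and spacing.

```cpp
#include <cctype>
#include <functional>
#include <map>
#include <string>
#include <utility>

// Lowercases the text, drops punctuation, and collapses runs of whitespace
// so that "  Open door! " and "open door" compare equal.
std::string NormalizeCommand(const std::string& Text) {
    std::string Out;
    for (unsigned char Ch : Text) {
        if (std::isalnum(Ch)) {
            Out.push_back(static_cast<char>(std::tolower(Ch)));
        } else if (std::isspace(Ch) && !Out.empty() && Out.back() != ' ') {
            Out.push_back(' ');
        }
    }
    if (!Out.empty() && Out.back() == ' ') {
        Out.pop_back();
    }
    return Out;
}

// Hypothetical lookup table from spoken phrases to game-logic handlers.
class VoiceCommandTable {
public:
    void Register(const std::string& Phrase, std::function<void()> Handler) {
        Handlers[NormalizeCommand(Phrase)] = std::move(Handler);
    }

    // Runs the handler for the recognized text; returns false if no phrase matches.
    bool Dispatch(const std::string& RecognizedText) const {
        auto It = Handlers.find(NormalizeCommand(RecognizedText));
        if (It == Handlers.end()) {
            return false;
        }
        It->second();
        return true;
    }

private:
    std::map<std::string, std::function<void()>> Handlers;
};
```

Exact matching after normalization is the simplest possible policy; a real project may prefer keyword or fuzzy matching, since recognizers rarely return a phrase verbatim.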

Recording Remote User Audio

You can record audio from remote users for later playback:

- Capture audio using Pixel Streaming Capturable Sound Wave

- Export the captured audio to a file using Export Audio

- Save the file for later use or analysis